Docker best practices help improve the overall security, efficiency, manageability, stability, and scalability of your Dockerized applications. While Docker aims to make things simple, adopting good practices from the start will save you headaches down the road as your deployment grows.

There are a few main reasons for following best practices in Docker:

Security - Following secure practices like isolating services, exposing only necessary ports, and running containers as a non-root user helps reduce the attack surface of your Docker deployment.

Efficiency - Practices like minimizing layers, using multi-stage builds, and leveraging Docker's cache can make your Docker builds and deployments much more efficient and faster.

Manageability - Labeling containers, pruning unused data, and configuring health checks make it easier to manage and monitor your Dockerized applications.

Stability - Logging to stdout instead of files, monitoring disk space usage, and isolating services can help ensure the stability of your Dockerized applications.

Scalability - Many of the practices like isolating services, minimizing layers, and pruning unused data help improve the scalability of your Docker deployment.

Some of the Docker best practices

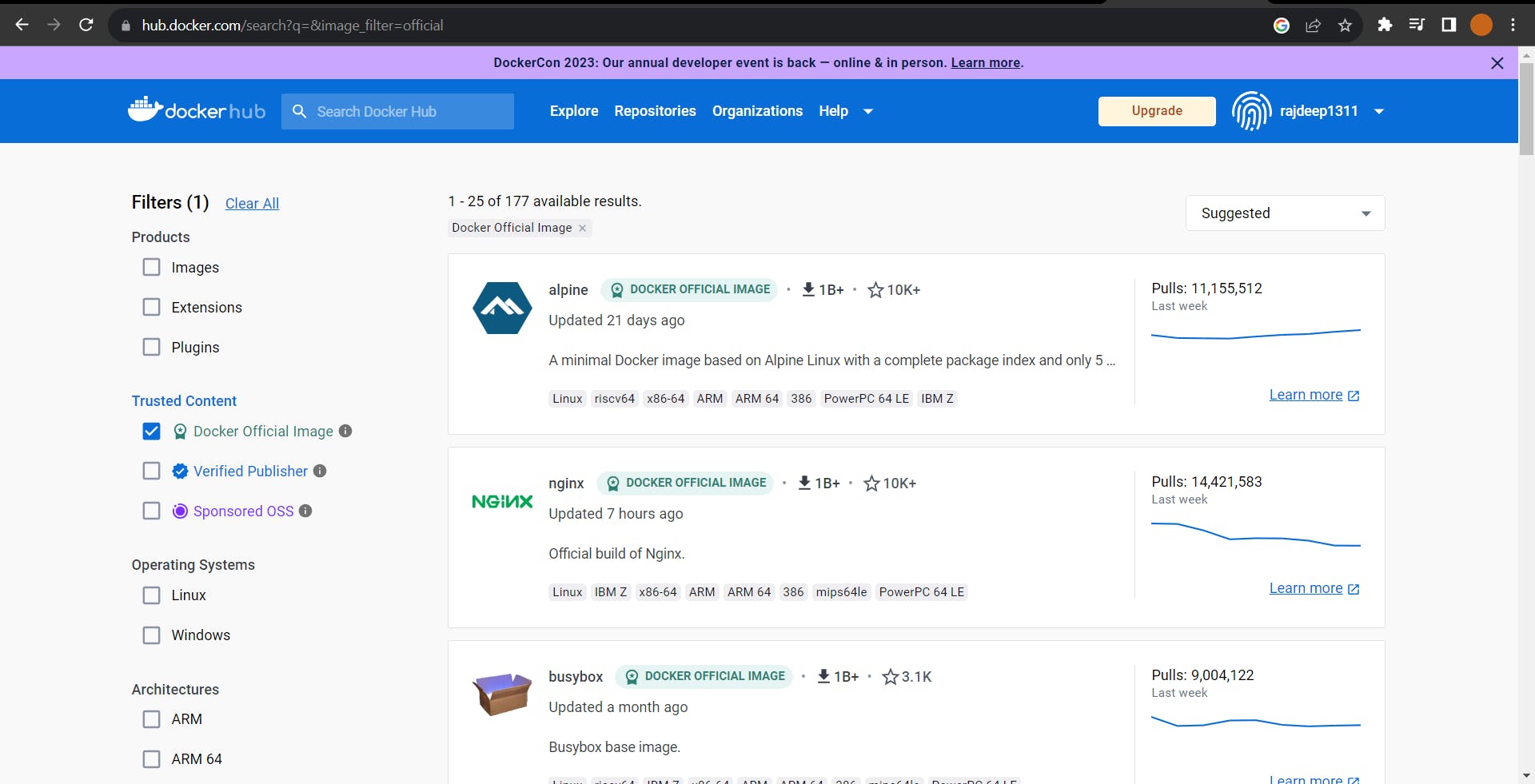

Use an official Docker image as the base image - Using an official Docker image as the base image for your Dockerfile provides a trustworthy, stable, and well-supported starting point for your container. Official images are maintained and patched upstream, and because they are so widely used, their layers are often already present on build hosts, which speeds up pulls and builds. Prefer them over rolling your own base image whenever possible.

Use a specific Docker image version - By pinning to an exact version instead of relying on the implicit latest tag, you gain the benefits of reproducibility, stability, and security at the cost of having to manually update the version in your Dockerfile for each new release. This trade-off is often worthwhile, especially for production images.

A good Dockerfile would specify an image like this:

FROM ubuntu:16.04

Rather than:

FROM ubuntu

Use a small-sized official image - By choosing the smallest suitable official Docker image, you gain all the benefits of official images while also keeping your final image small and lightweight. Official images designed to be small tend to have a smaller attack surface since they include less software and fewer dependencies, which reduces the potential for security vulnerabilities. Smaller images are also faster to pull, push, and deploy, since there is less data to transfer and unpack. So when choosing an official Docker image as your base image, you should:

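- Check if a smaller alternative exists for your language or framework, such as a slim or Alpine-based variant
- Avoid images with "full" or "complete" in the name, as these tend to be larger
- Only install the minimum required packages and dependencies in your Dockerfile
- Clean up package caches and temporary files in the same RUN instruction that creates them, so they never end up in an image layer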
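As a rough sketch of the checklist above, the following Dockerfile starts from a slim official variant and installs a single package without recommended extras, cleaning the package index in the same layer. The python:3.12-slim tag and the ca-certificates package are illustrative assumptions, not something this article prescribes; substitute the slim variant for your own language or framework.

```dockerfile
# Illustrative base image (assumption): a slim official variant instead of the full image
FROM python:3.12-slim

# Install only what is needed, skip recommended extras, and remove the apt
# package index in the same RUN so it never persists in a layer
RUN apt-get update \
    && apt-get install -y --no-install-recommends ca-certificates \
    && rm -rf /var/lib/apt/lists/*
```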

Alternatively, a tool such as SlimToolkit (formerly DockerSlim) can shrink an existing image, but that is a topic of its own.

Optimize caching image layers - By optimizing how you leverage Docker's layer caching, you can significantly improve the build performance of your Docker images. Ordering instructions carefully, merging commands, and minimizing layers are all important techniques.

- Order instructions from least to most changing - Place commands that change the least frequently first in the Dockerfile. This maximizes the amount of caching for subsequent builds.

For example:

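FROM ubuntu:22.04

RUN apt-get update && apt-get install -y curl

COPY . /app

The base image and system packages rarely change, so they come first and their layers are reused from the cache. The application source changes on almost every build, so it is copied last; only the layers from that COPY onward are rebuilt.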

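- Merge install commands - Run apt-get update and apt-get install in the same RUN instruction, and install all packages in one command. If apt-get update sits in its own layer, Docker can reuse that cached layer on a later build and install packages from a stale index.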

For example:

RUN apt-get update && apt-get install -y curl vim

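- Avoid reinstalling dependencies - Copy dependency manifests (for example package.json or requirements.txt) and install from them before copying the rest of the source. The install layer is then rebuilt only when the manifests change, not on every code change.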

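- Use ARG for build-time values - ARG variables exist only during the build and are not persisted in the final image, while ENV variables are. Both take part in layer caching: changing an ARG value invalidates the cache from the first instruction that uses it, so declare each ARG as late as possible.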

For example:

ARG VERSION

RUN install.sh $VERSION
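Put together, the caching points above might look like the sketch below. The Node.js base image, the package.json and package-lock.json manifests, the APP_VERSION argument, and server.js are hypothetical names chosen for illustration.

```dockerfile
# Pinned base image: changes rarely, so it stays cached
FROM node:20-slim

WORKDIR /app

# Copy only the dependency manifests first; this layer and the install below
# are rebuilt only when the manifests change
COPY package.json package-lock.json ./
RUN npm ci

# Application source changes most often, so it is copied after the install
COPY . .

# Hypothetical build-time value, declared late: changing it only invalidates
# the cache from the RUN below onward
ARG APP_VERSION=dev
RUN echo "$APP_VERSION" > /app/VERSION

CMD ["node", "server.js"]
```

A different value can be supplied at build time with docker build --build-arg APP_VERSION=1.2.3 . and only the final RUN layer is rebuilt as a result.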

Use .dockerignore file - Using a .dockerignore file is an easy but effective Docker best practice to improve the performance, security, and simplicity of your Docker images. The .dockerignore file is portable and can be checked into source control. This ensures consistency across environments. Changes to ignored files do not invalidate cached layers, improving build performance.

A good .dockerignore file might look something like this:

# Ignore temp files

*.tmp

# Ignore credentials

*.pem

# Ignore node_modules folder

node_modules/

# Ignore build artifacts

build/

dist/

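This will ignore:

- .tmp and .pem files in the root of the build context (patterns are matched relative to the context root; prefix them with **/ to match at any depth)
- The node_modules folder
- The build and dist folders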

Make use of multi-stage builds - Multi-stage builds are an extremely effective Docker best practice to create optimized images. They improve the build performance, image size, and security of your Docker images. Each stage can focus on a single task like building, testing, or running. This separates build-time from run-time dependencies.

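A basic multi-stage Dockerfile example. The binary is built with CGO disabled so that it is statically linked and runs on the musl-based Alpine image used in the final stage:

FROM golang:1.7.3 AS builder

RUN go get -d -v golang.org/x/net/html

COPY . /go/src/github.com/you/app

WORKDIR /go/src/github.com/you/app

RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o /go/bin/app .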

FROM alpine:3.19

RUN apk --no-cache add ca-certificates

WORKDIR /root/

COPY --from=builder /go/bin/app .

CMD ["./app"]
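To illustrate the idea of one stage per task, here is a sketch with separate build, test, and runtime stages. It assumes a Go module project with go.mod and go.sum in the build context; the stage names, image tags, and paths are illustrative rather than taken from the example above.

```dockerfile
# Build stage: full toolchain, dependencies downloaded before the source is copied
FROM golang:1.22 AS build
WORKDIR /src
COPY go.mod go.sum ./
RUN go mod download
COPY . .
# Static binary so it runs on the musl-based runtime image
RUN CGO_ENABLED=0 go build -o /out/app .

# Test stage: reuses the build stage and runs the test suite
FROM build AS test
RUN go test ./...

# Runtime stage: only the compiled binary and CA certificates
FROM alpine:3.19 AS runtime
RUN apk --no-cache add ca-certificates
COPY --from=build /out/app /usr/local/bin/app
CMD ["/usr/local/bin/app"]
```

With BuildKit, a plain docker build only builds the stages the final image depends on, so the tests run when the stage is requested explicitly, for example with docker build --target test .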

Use the least privileged user - Running your Docker container as a non-root user is an important security best practice. It limits the potential damage from vulnerabilities, adheres to the principle of least privilege, and makes the container a less attractive target for attackers.

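Here's an example Dockerfile that runs as a non-root user. Steps that need root privileges, such as creating the user and installing the application, run first, and USER switches to the unprivileged account just before CMD:

FROM ubuntu:22.04

RUN groupadd -r myuser && useradd -r -g myuser myuser

WORKDIR /app

COPY --chown=myuser:myuser . /app

RUN make install

USER myuser

CMD ["/app/myprogram"]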
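A variation on the same idea, sketched under assumptions: the account gets an explicit numeric UID and GID, and USER refers to those numbers. This keeps file ownership predictable and lets tooling confirm that the user is not root without resolving a name. The debian:12-slim base, the ID 10001, the app account name, and ./myprogram are illustrative choices.

```dockerfile
FROM debian:12-slim

# Create a dedicated group and user with fixed IDs (10001 is an arbitrary choice)
RUN groupadd --gid 10001 app \
    && useradd --uid 10001 --gid app --no-create-home --shell /usr/sbin/nologin app

WORKDIR /app

# Hand ownership of the application files to the unprivileged account
COPY --chown=10001:10001 . .

# Everything from here on, including the running container, uses the non-root IDs
USER 10001:10001

CMD ["./myprogram"]
```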

Scan your images for security vulnerabilities - Regularly scanning your Docker images for known vulnerabilities, for example with a scanner such as Trivy or Docker Scout, is an important security best practice. It allows you to find and fix issues before they are exploited, improve your image security posture, and meet compliance standards. Rebuild your images regularly on updated base images so that security patches are actually picked up.

Conclusion

Following these Docker security best practices can help:

- Detect vulnerabilities proactively
- Limit the impact of vulnerabilities if exploited
- Reduce the likelihood your images will be targeted by attackers
- Meet compliance requirements

The key thing is to implement a combination of approaches:

- Secure image-building practices
- Scanning to detect issues
- Fixing issues promptly
- Limiting what containers can access at runtime

By adopting these Docker security best practices, you can significantly improve the security posture of your Docker deployment and minimize risks.